SQL Server in Microsoft Azure: How to gain performance by flexibility and save costs at the same time

This is the first time that I am tackling Microsoft Azure in an article. (Microsoft.com: What is Microsoft Azure?)

Microsoft Azure now provides a plethora of services, one of which is the hosting of virtual machines that run SQL Server. This is what we call IaaS (Infrastructure as a Service).

– This service model is explained in more detail and compared, among others, with the PaaS approach here: Which Windows Azure Cloud Architecture? PaaS or IaaS?

– A nice comparative graph is available in this blog article:

Windows Azure IaaS vs. PaaS vs. SaaS

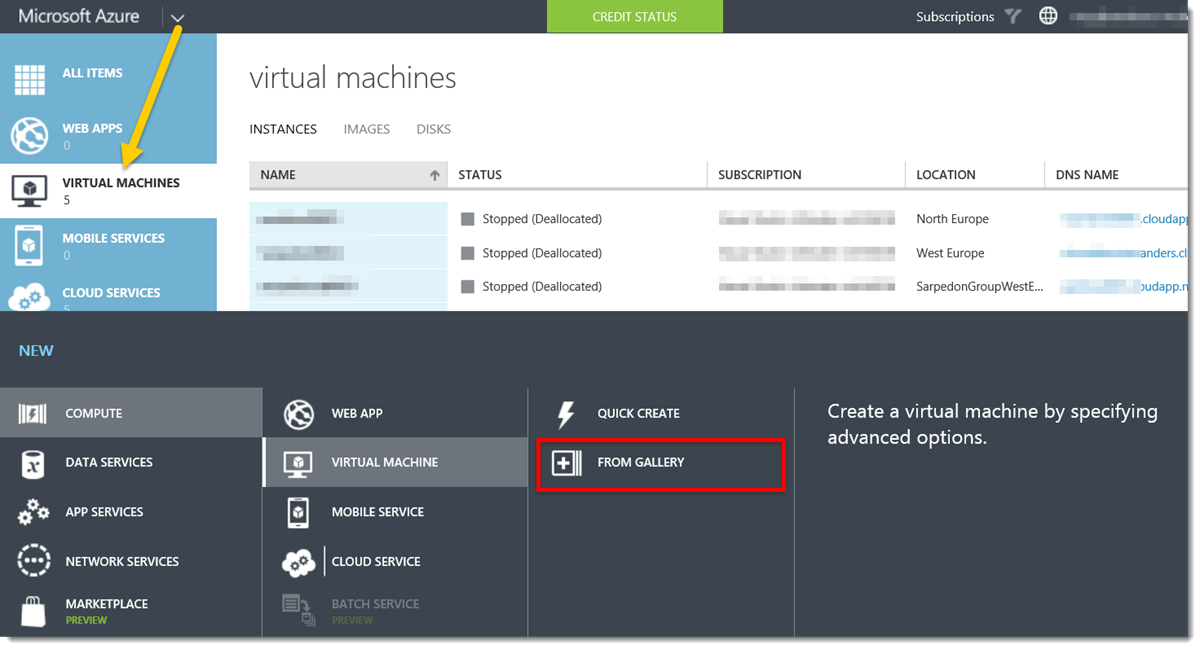

Once you have determined that IaaS makes sense for part of your own environment, the next question is how to configure the SQL Server system. The Azure Portal offers ready-made images that make getting started easier, especially for beginners.

– In fact, with only 7 clicks it is possible to set up a Windows Server including a licensed SQL Server when using a template from the gallery.

However, production use of SQL Server requires some more effort, as the standard templates contain just one disc, the one on which the operating system is located. There are two main reasons why you should not run your SQL Server databases there: data integrity and IO performance.

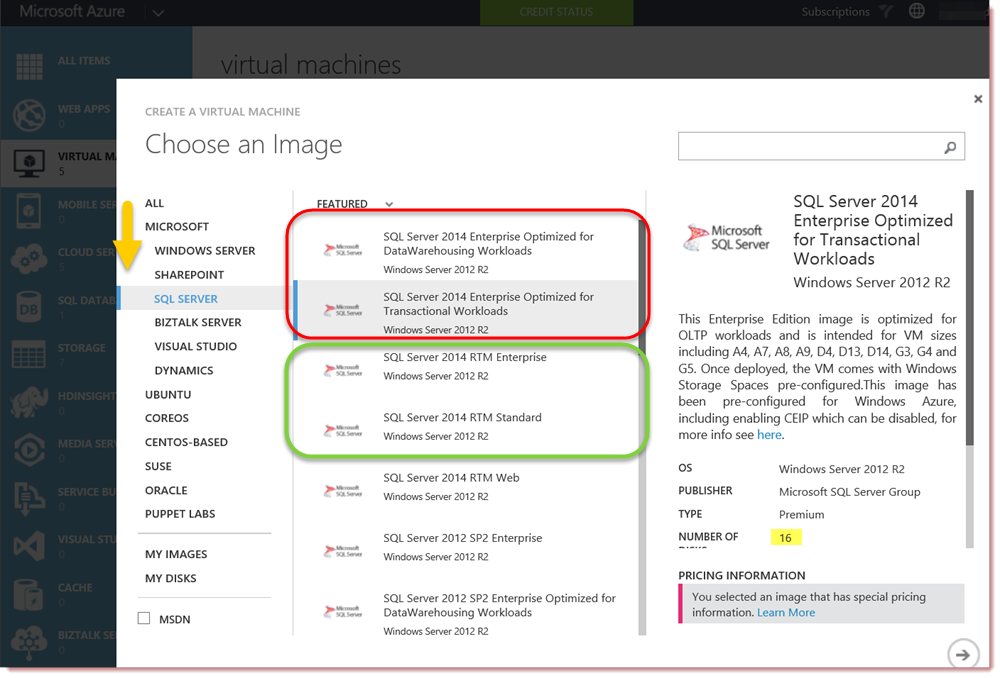

Therefore, Microsoft also provides so-called “optimized” images for OLTP or OLAP scenarios (highlighted by a red frame in the screenshot), which come with 15 additional data discs right away, making a total of 16 discs.

1) Option 1, the “traditional approach”, is therefore: several data discs and a machine with the corresponding CPU power to support them.

In this case, the 15 data discs (in addition to the OS disc) are pre-configured for SQL data and SQL logs in 2 storage pools of 12 and 3 discs, respectively.

The maximum IOPS also depend on the available CPU cores. One should not expect linear performance increases anyway.

The problem here is that you lose almost all flexibility, both in terms of VM size (performance) and ultimately also in terms of pricing.

This is because using all 16 data discs requires permanently running a VM size with at least 8 cores (A4, A7, A8, A9, D4, D13, D14, G3, G4 and G5). From there, it is only possible to scale upwards.

– If you need more than 16 additional data discs, you will have to use a VM with 16 or 32 cores (G-series), which allows you to attach up to 32 or 64 data discs, respectively, besides the OS disc.

In terms of pricing, this definitely sets a lower limit on what you have to pay.

This, however, makes it more difficult to reach the goal of saving costs through cloud-based systems.

Yet the great strength of the cloud-based approach is that, ideally, you request and receive a specific level of performance (or a service) only when you need it, and do not leave it idly “activated” when you do not. After all, you pay for what you “subscribe” to, which in this case is not necessarily what you actually use.

Our ideal system, thus, should be maximally scalable, both upwards and downwards.

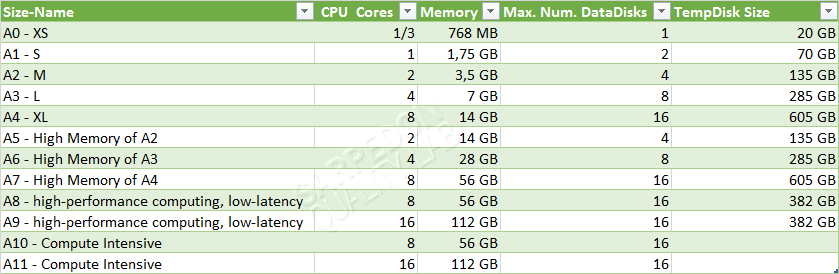

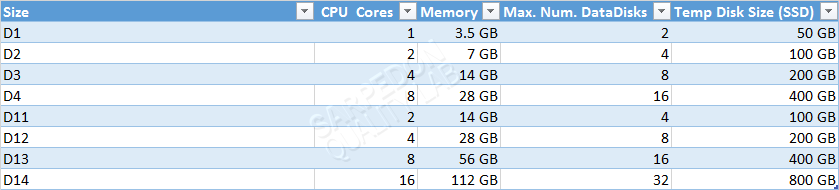

This will probably become clearer if you look at the charts of the current virtual machine sizes (as of 30 April 2015) and their key performance figures.

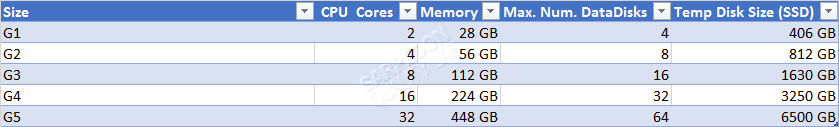

At the moment, the standard tier comprises 3 series: A, D and G, with the G-series being that of the greatest power – and hence the most expensive.

The charts show the number of dedicated CPU cores, the amount of memory, the size of the temp disc and, very important for scalability, the maximum number of data discs permitted besides the OS disc itself.

Per data disc you get up to 500 IOPS. To get more IOPS you therefore need more data discs, but also more CPU cores. However, a machine with 16 data discs can hardly be scaled down in times of low utilization. An A2, for example, is then out of reach as a size for some kind of minimum operation. And if you need even more data discs to absorb IO peaks, you restrict yourself further and have to keep paying for the most expensive machines.
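The disc arithmetic behind this can be sketched in a few lines of Python. This is only a rough illustration: it assumes that the 500 IOPS per data disc quoted here are actually reached and it ignores the dependency on CPU cores. The function name `data_discs_needed` is made up for this example.

```python
import math

# Figure quoted in the article; real throughput also depends on CPU cores.
IOPS_PER_DATA_DISC = 500


def data_discs_needed(target_iops: int) -> int:
    """Return the minimum number of striped data discs for a target IOPS value."""
    if target_iops <= 0:
        return 0
    return math.ceil(target_iops / IOPS_PER_DATA_DISC)


if __name__ == "__main__":
    for target in (1_000, 4_000, 8_000):
        print(f"{target} IOPS -> at least {data_discs_needed(target)} data discs")
```

A target of 8,000 IOPS already calls for 16 data discs under these assumptions, which is the point at which the smaller VM sizes are no longer an option.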

Are there any alternatives? How can you stay flexible enough to save costs, switching to larger machines (“scale-up”) when necessary and restricting yourself to minimal CPUs at night or on weekends (“scale-down”)?

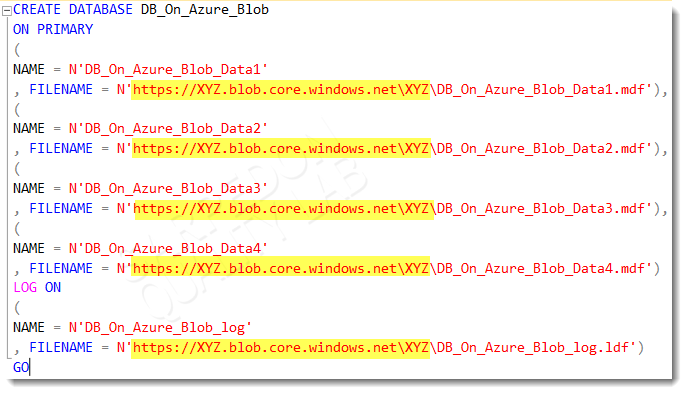

2) Storing data files directly in Azure Blob storage

The obvious advantage here is that data discs are not required – and these are what significantly limit scaling options. Instead of the data discs, the SQL Server database files are stored directly in the Blob storage.

The database creation can look something like this:

This option has been supported since SQL Server 2014.

Here, too, you get 500 IOPS per file, with a general limit of 20,000 IOPS per storage account.

Here you can find a detailed example of the setup, including code:

Create a SQL Server 2014 Database directly on Azure Blob storage with SQLXI

The disadvantage of this option is, in my view, its complexity. Creating the Shared Access Signature required for accessing the Blob container is far from trivial.

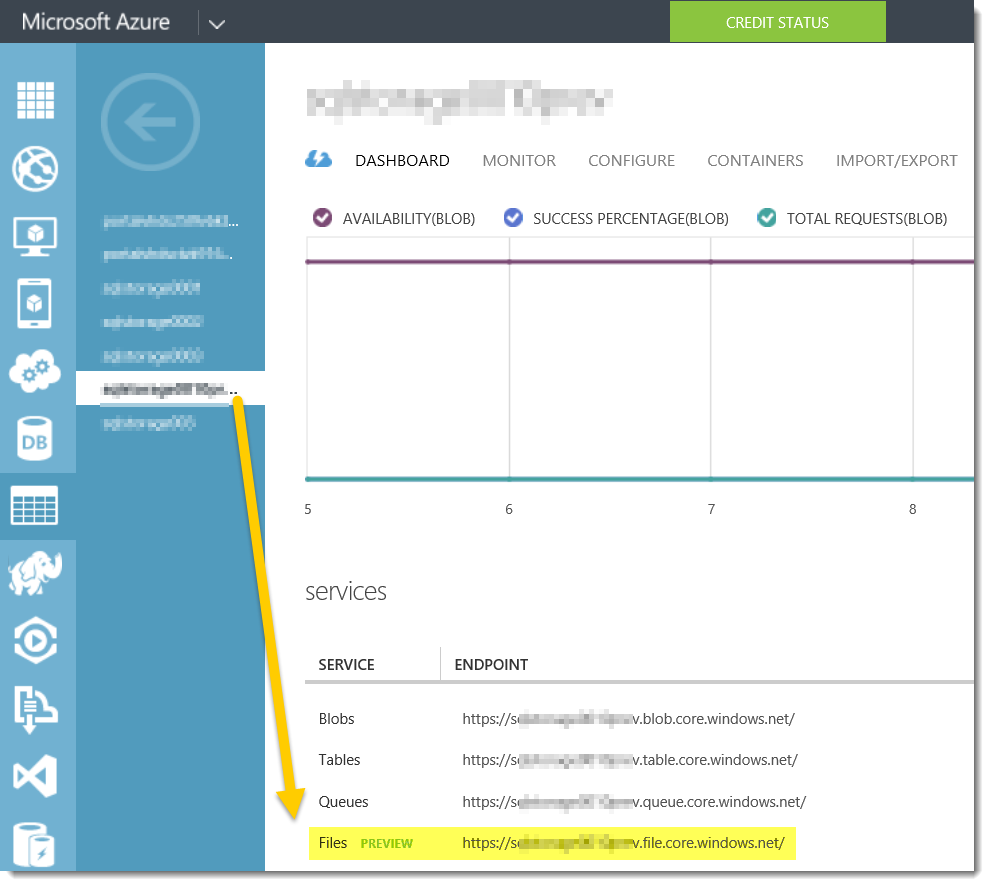

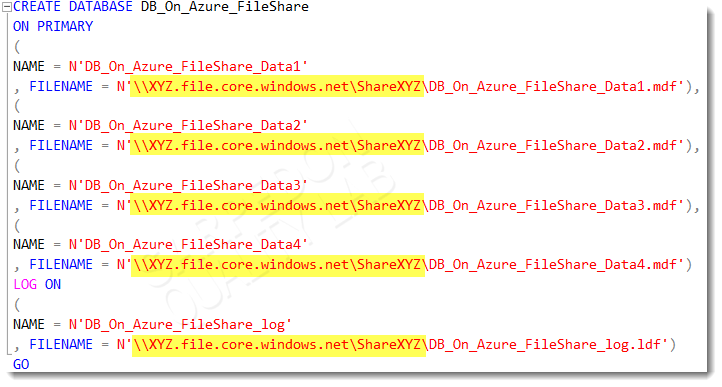

3) Storing data files on an Azure File Share

Since May last year, the Azure File service (Introducing Microsoft Azure File Service) has been available as a preview feature.

In addition to “real” directories, this service also supports shares based on SMB 2.1.

Here we get a maximum of 1000 IOPS per share. In other words, for the same number of IOPS only half as many data files are required as with direct access to Azure Blobs.
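To make this comparison concrete, here is a small Python sketch that uses only the figures from this article (500 IOPS per file on Blob storage, 1000 IOPS per share, 20,000 IOPS per storage account). The function and constant names are hypothetical, and the calculation assumes that every file or share actually reaches its nominal maximum.

```python
import math

# Limits as quoted in the article (status: April 2015).
IOPS_PER_BLOB_FILE = 500
IOPS_PER_FILE_SHARE = 1000
IOPS_PER_STORAGE_ACCOUNT = 20_000


def units_needed(target_iops: int, iops_per_unit: int) -> int:
    """Minimum number of files (Blob) or shares (File service) for the target."""
    return math.ceil(max(target_iops, 0) / iops_per_unit)


def fits_one_account(target_iops: int) -> bool:
    """True if the target stays within the per-storage-account limit."""
    return target_iops <= IOPS_PER_STORAGE_ACCOUNT


if __name__ == "__main__":
    for target in (4_000, 20_000, 24_000):
        blobs = units_needed(target, IOPS_PER_BLOB_FILE)
        shares = units_needed(target, IOPS_PER_FILE_SHARE)
        print(f"{target} IOPS: {blobs} blob files or {shares} shares, "
              f"fits one storage account: {fits_one_account(target)}")
```

For 4,000 IOPS, for instance, this yields 8 data files on Blob storage versus 4 shares, while any target above 20,000 IOPS no longer fits into a single storage account with either option.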

It is important to start by activating the preview feature for a storage account.

Next, you create the necessary shares via PowerShell and distribute your database files across them.

The limit of a maximum of 20,000 IOPS per storage account also applies here.

But in my view, the access is significantly easier.

A disadvantage of this option is that you have to make sure that the SQL Server service starts only when the shares are available via network.

Aside from occasional access problems, which can probably be attributed to the preview status, this combination is in my view the easiest one to administer, once you have got used to the automatically controlled delayed service start.

The Azure File service is currently offered at a 50% discount, which puts it around 20% below the Azure Blob storage prices.

The advantage of the latter two options is evident: you are not bound to a particular size of the system; instead, you can start your SQL Server on a machine with far more or far fewer CPU cores at certain times.

One final remark on the transaction log:

There is only one log file per database (several log files would be written to sequentially and would not bring any performance advantage). Here, the immediate benefit of file shares is that they deliver 1000 IOPS instead of 500 IOPS. If this is not sufficient, unfortunately only the traditional approach combined with Windows Server Storage Spaces remains: striping several data discs for the transaction log, with the corresponding scalability disadvantage.

I hope this article, using SQL Server as an example, has made tangible what I consider the biggest advantage of the cloud-based approach. As soon as you get used to the cloud’s “service concept” and leave behind traditional thinking patterns like “I need an x-core machine”, you can build very cost-efficient and high-performing systems by combining different services such as, as demonstrated above, virtual machines and file services.

Of course, IOPS are not always the ultimate performance indicator. I have chosen them over MB/sec purely for the sake of simplicity, without taking the request size into account. In general, the values should be understood as based on 4 KB sequential read requests. This applies to all storage mechanisms addressed here and should therefore suffice for the purpose of comparability.

Those who are interested in further dealing with this topic are welcome to join the free one-day SQL Server conference SQLSaturday Rheinland on 13 June in Sankt Augustin.

On 12 June, the day before, there will also be a free PreCon, Hybrid IT Camp: Azure Szenarien & die eigene flexible Infrastruktur für jedermann (“Azure scenarios & individual, flexible infrastructures for everybody”) (Short Link: http://bit.ly/sqlsat409hybridit), which I will be running with Patrick Heyde (Blog/Twitter) from Microsoft.

Below is a list for further reading:

- Azure Subscription and Service Limits, Quotas, and Constraints

- Azure Storage Pricing

- Create a SQL Server 2014 Database directly on Azure Blob storage with SQLXI

- Introducing Microsoft Azure File Service

- How to use Azure File storage with PowerShell and .NET

- Microsoft Azure Preview features

- Best Practices & Disaster Recovery for Storage Spaces and Pools in Azure

- New VM Images Optimized for Transactional and DW workloads in Azure VM Gallery

Have fun on Azure cloud

Andreas

P.S.: A big “thank you” goes to Patrick Heyde for his valuable tips and mentoring in Microsoft Azure – I, too, had to get used to a new way of thinking 🙂
