Tracing with XEvents in Azure SQL Database

A step-by-step guide.

Being Developer, Administrator or simply Support for an Azure SQL Database based Application, there are those moments when the Azure-provided Dashboard-capabilities are not sufficient and you need to conduct a throughout trace of certain statements occurring.

Sometimes you will need:

- superior filtering in the source

and the ability to

- group

- aggregate

- dice/filtering in the collected data

..your data to find problematic queries.

Or you are interested in events that may not be the queries themselves to answer questions like for example:

- “What prevented adaptive query plan usage?”

- Event: adaptive_join_skipped

- “how exactly did the tuple mover process for my columnstore Index run?”

- Possibly interesting events:

- columnstore_tuple_mover_begin_compress

- columnstore_tuple_mover_begin_delete_buffer_flush

- columnstore_tuple_mover_compression_stats

- columnstore_tuple_mover_delete_buffer_flush_requirements_not_met

- columnstore_tuple_mover_delete_buffer_truncate_requirements_not_met

- columnstore_tuple_mover_delete_buffer_truncate_timed_out

- columnstore_tuple_mover_delete_buffer_truncated

- columnstore_tuple_mover_delete_buffers_swapped

- columnstore_tuple_mover_end_compress

- columnstore_tuple_mover_end_delete_buffer_flush

- Possibly interesting events:

- or when did the garbage collector of my memory optimized table occur and how many rows did it remove?”

- Possibly interesting events:

- gc_base_generation_evaluation

- gc_base_generation_updated

- gc_cycle_completed

- gc_notification

- Possibly interesting events:

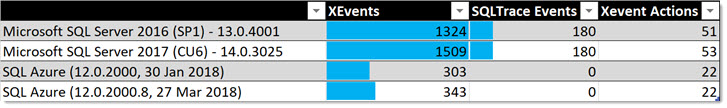

If you hoped to use SQLTrace/Profiler for Tracing, from looking at this list alone you can tell there is no way for this old, outdated tool from the last decade in Azure SQL Database. 😉

(yes, a bit harsh, but it helps to remember that XEvents were introduced back in SQL 2008 and the SQLTrace technology has been in a coma waiting to get unplugged from life-support since SQL 2012 when XE received a superior GUI and all missing Events. You can read more here: andreas-wolter.com/en/extended-events-vs-sql-trace/ )

First conclusion: The architecture of SQLTrace is simply not supported in Azure SQL DB.

So, on to Extended Events.

When you are connected via SQL Server Management Studio to your Azure-hosted Database, you will see “Extended Events” inside the database-scope

From there you can use the GUI to create your session, just as on-premise.

You will notice that the option to start the live target viewer is greyed out. That’s because this target, not even the plain “event_stream” and “compressed_history”-targets, isn’t supported in Azure.

Causality tracking, which is based on certain private “actions,” is supported though.

You may also notice that less templates than on-premise are included. Mainly the “Profiler-equivalents” but also some others are missing.

But who cares… I myself have never used any of them since they were introduced back in SQL Server 2012. The DDL is already so simple to use.

Next you decide for your events, filters, and if necessary, actions.

At the time of this writing, the last Update of SQL Azure DB from March 27/2018 offers 343 traceable events.

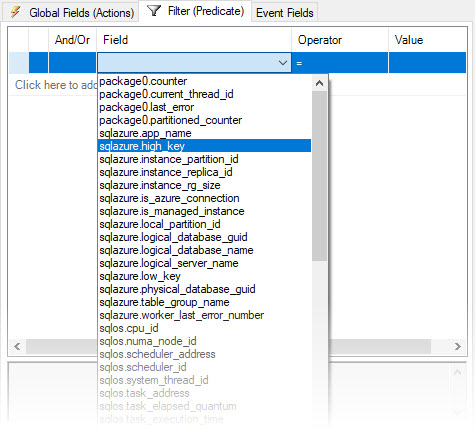

The interesting part about the filters/predicates is, that there are a couple of Azure-specific predicates, from the “sqlazure”-package:

The list of special azure-predicates as of today is:

| Predicate | Description |

| logical_database_name | Get current database name. |

| logical_server_name | Get current server name. |

| is_azure_connection | Get whether current session is an azure connection. |

| is_managed_instance | Get whether this is Managed Instance. |

| local_partition_id | Get the current local partition id. |

| app_name | Get the app name of the current partition. |

| table_group_name | Get the table group name of the current partition. |

| low_key | Get the low key of the current partition. |

| high_key | Get the high key of the current partition. |

| worker_last_error_number | Get the last error number from the current worker. |

| logical_database_guid | Get current database logical GUID. |

| physical_database_guid | Get current database physical GUID. |

| instance_partition_id | Get current sqlserver instance winfab partition id. |

| instance_replica_id | Get current sqlserver instance winfab replica id. |

| instance_rg_size | Collect instance RG container size. |

Those can come in very handy!

Then you will need to decide for a target. Here we are really limited: All that we can choose from is:

- event_file

- event_counter

- ring_buffer

Now I am not sure this choice makes a lot of sense. Why the event_counter-target, why not histogram or pair_matching? From the extensive benchmarks I have done (Performance overhead of tracing with Extended Event targets vs SQL Trace under CPU Load), I know that the counter-target really does not have any less overhead than the file-target. And also, technically a file-target can easily give you a count. What I do not understand is, why Microsoft did not provide the pair_matching target. That one has capabilities that no other target has! And it’s not more complicated to support. I can only guess that this was based on real-world usage, and I find very few people who actually know about that special target’s advantages. Too bad.

The Ring-Buffer target can be parsed for further analysis via XQuery. I have an example here: How to import Extended Events session event_file target and parse deadlock-graph

The File-Target in Azure SQL Database

While the file-target is the easiest one to use on Premise, in Azure SQL Database there are some obstacles. This is because you do not have a concept of local storage.

You need to use a path to a storage container. And for that you need a Storage Account and somehow allow the database to write to it.

The steps to set up a file-target are:

- Set up a storage account

- Create a container

- a. Get path

- b. Get Shared Access Signature (rwl)

- On the Azure SQL DB create a master encryption key

- Create credentials in the database to access the storage account

- Configure Extended event session with the complete target URL

- Start the session

- Analyze the data

Step 1) Set up a storage account

For all of this you can use Powershell.

But I am going to show the manual approach via the Azure Portal. There you go to storage accounts and create a new one. Make sure to select “general purpose v2” and not v1 storage. For the other options chose your individual needs.

Step 2) Create a Container

Inside the storage account you have the option to create containers:

Once this is done you need to:

Get Path and Shared Access Signature

From here on I suggest you use Azure Storage Explorer – or Powershell altogether to create further folders (optionally) and get the Shared Access Signature (SAS)

In Storage Explorer you can right-click on the container and take it from there:

Create the Access Policy and get the Shared Access Signature:

Permissions: rwl

Besides the URL you need the string after the question mark, which is your Shared Access Key. It will look similar to this:

st=2018-04-07T17%3A00%3A00Z&se=2018-06-10T13%3A45%3A00Z&sp=rwl&sv=2017-04-17&sr=c&sig=XDQNl%2BLg7aTadsdwSk9zFT2Ri127Ad1v%2Fg59yJfRR2mE%3D

Once you have that you can go back to SSMS

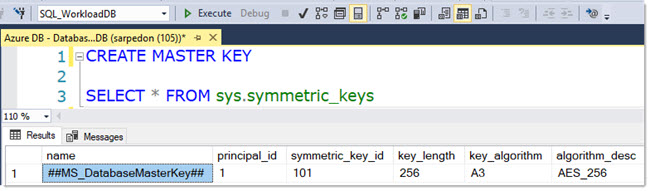

Step 3) Create a Master Encryption Key

Before we can create a Credential with the Shared Access Key, we need to have a database master key to protect that entity.

You create a master key with this simple command:

CREATE MASTER KEY

You do not need to specify a password for our purposes. This server is managed by the Microsoft Azure platform.

Step 4) Create a Credential

Now we need to create the Credential which will contain the Shared Access Signature to access our prepared storage account.

Note: This command will only work on Azure SQL DB:

As you can see you can create multiple Credentials on your database.

Step 5) Configure Extended event session

You enter the filename in the format https://StorageAccount.blob.core.windows.net/Container/FileName.xel

I would advise to include the Database-name in the Filename, unless you want to have a container for each. So in my example it will be: https://sarpedonstore.blob.core.windows.net/azuredemos/xetrace/Workload_DB_XE_Azure_Demo.xel

In the GUI you can see the Credentials that exist:

The DDL-statement for the session looks like this:

Step 6) Start the session, run workload, stop session

Self-explanatory 😉

(you can use the GUI or T-SQL or Powershell)

Step 7) Analyze the data

Once you are done and want to analyze the captured data there are 2 ways: SSMS Extended Events Viewer, or T-SQL using sys.fn_xe_file_target_read_file.

Using the fn_xe_file_target_read_file-Function you will notice, that different to the local file target the storage container URL only accepts exact file name matches – no wildcard. This makes working with the File-Target extra inefficient.

I also show how to parse the file target here: How to import Extended Events session event_file target and parse deadlock-graph

Because of this limitation though, I recommend downloading the files to a special monitoring server (in Azure) and taking it from there.

Download the file to a Monitoring Server

To download the file I recommend using Azure Storage Explorer

Now you can analyze the file locally in SSMS.

The first times this procedure will be very clumsy and slow until you develop a routine and automation for all of those steps. I hope this guide helps you get quick start with Extended Events in Azure SQL Database.

Happy Tracing

Andreas

Leave a Reply

Want to join the discussion?Feel free to contribute!