Optimizing workflows with In-Memory and Natively Compiled Objects in SQL Server

– or how it does not work

During the course of optimizing data-access-code with the In-Memory-functionalities in Microsoft SQL Server (memory optimized tables + indexes, memory optimized table valued parameters and natively compiled stored procedures), you may often realize that sometimes you go one step forward and then another one backwards.

As often: the devil is in the details.

Take the sample-scenario of a stored procedure that has a workflow that can be summed up like the following:

- Create a Temporary Table (#)

- Insert a batch of data, ~200,000 rows

- Update the Temptable using a join to another (disk-based) table (Dimension)

- Update the Temptable further

- Delete some rows

- Insert the remaining data into a Target-Table (Fact) (disk-based)

That pattern is not so uncommon in ETL-scenarios, when loading data into Datawarehouse Fact-tables, but can be used in many other contexts.

Now one could be tempted to improve performance using Memory-Optimized Table Valued Parameters (TVP) instead of the Temptable.

- Create a memory optimized TVP (@)

- Insert a batch of data, ~200,000 rows

- Update the TVP using join to the other disk-based table

- Update the TVP further

- Delete some rows

- Insert the remaining data into a Target-Table (disk-based)

The idea behind is that in the XTP-engine one can work without Locks, even without latches, and in certain scenarios even further without any IO. (Here a bit more on that: The SQL Server 2016 In-Memory Evolution – from row-store to columnstore to in-memory operational analytics, SQL Server 2016 SP1 removes the editions’ programming feature limits! Everything from Always Encrypted to XTP/In-memory for everybody!)

The concept would be to simply replace the Temporary Table with a Memory Optimized TVP.

But the outcome might floor you: It may or even may not get a bit faster – or even a bit slower!

If there is a second table involved (i.e. Join a dimension), then maybe we need to get that second table memory optimized as well.

But still in my case you will see, it even becomes slower again!

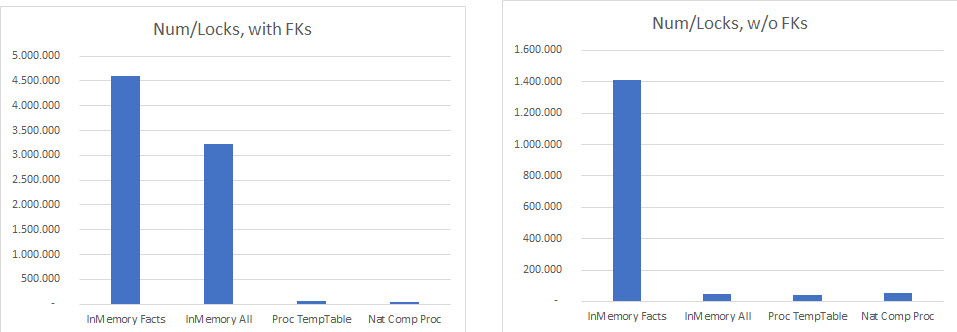

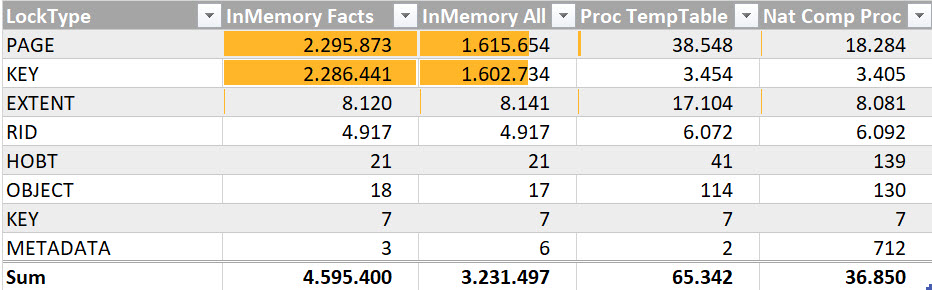

The problem lies, among other things, in the amount of locking, that is still involved:

Why on earth do we suddenly have MORE Locking by using memory optimized tables?

The answer lies in the fact that in order to get the data into memory optimized tables, we still have to read them from the disk-based tables + write back in the end (in my case even supported by a Clustered Columnstore Index – in vain…). Even more so when the memory optimized table/TVP is joining the still disk-based Dimension-table.

If you disable or remove foreign keys during this process, you will see an equal performance improvement for all the tested approaches.

So, in order to truly gain a noticeable performance improvement, unfortunately, in many cases, there is no “quick win”.

This is because as described, the bottleneck “disk” does not simply get removed, but still remains in Interop-Mode. All interactions between the In-Memory engine and the standard page-based engine slow your work down. So you really need to eliminate this as much as possible. This is why I sometimes will say: “In order to truly profit from In-Memory, you need to redesign your processes.”

This means: Get your workflow and schema(!) as much as possible purely into In-Memory!

Once you have mastered this stage, you will be rewarded with the ability to use natively compiled stored procedures.

By their very nature these cannot work with On-Disk/pages-based tables and profit from extremely optimized compiled code, which will never be re-compiled unless you absolutely want it to.

On the way to reaching that goal you will surely stumble upon one of the many still existing limitations of the XTP-engine, which does not support the same T-SQL surface as the for decades evolved standard engine.

But this improves in every version. In SQL Server 2016 you will most likely be hindered by the Errors like:

Msg 12311, Level 16, State 39, Procedure ProcedureName, Line 73 [Batch Start Line 11]

Subqueries (queries nested inside another query) is only supported in SELECT statements with natively compiled modules.

Msg 12319, Level 16, State 81, Procedure ProcedureName, Line 64 [Batch Start Line 11]

Using the FROM clause in an UPDATE statement and specifying a table source in a DELETE statement is not supported with natively compiled modules.

Msg 10775, Level 16, State 1, Procedure ProcedureName, Line 36 [Batch Start Line 11]

Object ‘SchemaName.TableName’ is not a memory optimized table or a natively compiled inline table-valued function and cannot be accessed from a natively compiled module.

And some more…

Once you have overcome it with clever re-design, you will finally profit from the true potential of In-Memory, like the ~50% performance improvement in my sample ETL-workflow.

The optimized workflow goes like this:

- Prepare a permanently available memory optimized table with SCHEMA_ONLY

- Insert the batch of data, before starting the natively compiled stored procedure

- For the Insert already join the second table and integrate the necessary data/columns to avoid a second Insert

- Inside the natively compiled stored procedure Update the memory optimized table using simple statements without Subselects or Joins

- Delete some rows directly inside the proc

- Back outside of the natively stored proc Insert the remaining data into the Target-Table (disk-based)

The limit is still the disk-based engine, as one can see in this query plan of the final code of the final Insert:

The final results and performance comparison are as follows:

There is a lot more to investigate in such a POC, which goes beyond the purpose of this article.

For example, one might notice the brutally increased amount of locking in Interop-Mode versus standard T-SQL – suspiciously identical to the number of rows being passed though…

I hope my dear readers take this as a motivation that while the path may be stony, one can get amazing results with the In-Memory engine in SQL Server. With the memory sizes that one can buy today for comparably little money (think 1.5TB per socket), I am waiting for the day that most data will simply be In-Memory from the outset. And those of you who learn about the new paradigms will be the first to profit from it.

If you are in South-Asia this summer, you are welcome to come to my full day PreCon on In-Memory technologies in SQL Server at the Data Platform Summit in Bangalore, India, August 16th:

Here a direct link: http://dataplatformgeeks.com/dps2017/pre-conference-seminars/

Happy memory-optimizing

Andreas

I tested against, v2017 [ Microsoft SQL Server 2017 (RTM-CU9-GDR) (KB4293805) – 14.0.3035.2 (X64) ], and got same error.

Msg 12311, Level 16, State 37

Subqueries (queries nested inside another query) is only supported in SELECT statements with natively compiled modules.

Later will check against v2019.

Thanks for checking, Daniel

Subqueries are not mentioned in the “What’s new in SQL Server 2019” (https://docs.microsoft.com/en-us/sql/sql-server/what-s-new-in-sql-server-ver15?view=sqlallproducts-allversions), (or 2017), so no change there.

What is coming in 2019 is the ability to configure tempdb metadata to be backed by memory-optimized tables (https://docs.microsoft.com/en-us/sql/relational-databases/databases/tempdb-database?view=sqlallproducts-allversions#memory-optimized-tempdb-metadata)

regards

Andreas

Nice one.

I googled on Msg 12311, Subqueries (queries nested inside another query) is only supported in SELECT statements with natively compiled modules.” and lucked in your post.

Thanks for heavy research on this.

I was hoping to quickly determine whether it has been addressed in a later version.

Once I have more time, I will do my own research.

Thanks for documenting some of the cobwebs.

Daniel