The SQL Server 2016 In-Memory Evolution

– from row-store to columnstore to in-memory operational analytics

SQL Server 2016 is finally available, and with it “version 2” of the In-Memory OLTP engine, if you will.

At various international conferences, and as early as the IX in 2014, I have presented what is behind the In-Memory engine introduced in SQL Server 2014. I just had not found the time to put it into a blog article until now.

In this article I will shed light on the innovations and improvements Microsoft has been working on for the past 2 years, many of which can be attributed to customer feedback. In fact, that feedback consisted to a great extent of notes like “not practicable because this and this is missing.”

And let me say one thing before I start: in my view, Microsoft has been able to address the majority of blockers.

That means everybody should at least consider evaluating In-Memory, and in almost all database projects there are structures that can be implemented more elegantly with In-Memory. Ok, maybe not everybody, because this feature is unfortunately limited to the Enterprise Edition.

The most important innovations for memory-optimized tables are:

It is now possible to define Unique Indexes as well as foreign key constraints. The latter are only possible between memory-optimized tables (and not between disk-/page-based and memory-optimized tables), and must always refer to the primary key – referring to Unique Indexes is not possible.

Moreover, NULL values are now allowed in non-unique indexes (but, in contrast to disk-based tables, not in unique indexes!).
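As a minimal sketch of these constraint options, two memory-optimized tables could be declared as follows. All table, column, and constraint names are hypothetical, and the database is assumed to already have a MEMORY_OPTIMIZED_DATA filegroup:

```sql
-- Hypothetical parent table: primary key, unique constraint (backed by a
-- unique index), and a non-unique index on a nullable column.
CREATE TABLE dbo.Customer
(
    CustomerID int          NOT NULL
        CONSTRAINT PK_Customer PRIMARY KEY NONCLUSTERED,
    Email      varchar(100) NOT NULL,  -- non-Unicode data
    Region     varchar(20)  NULL,      -- nullable column
    CONSTRAINT UQ_Customer_Email UNIQUE NONCLUSTERED (Email),
    INDEX IX_Customer_Region NONCLUSTERED (Region)  -- non-unique, NULLs allowed
)
WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA);
GO

-- Hypothetical child table: the foreign key references the primary key
-- of another memory-optimized table.
CREATE TABLE dbo.CustomerOrder
(
    OrderID    int       NOT NULL
        CONSTRAINT PK_CustomerOrder PRIMARY KEY NONCLUSTERED,
    CustomerID int       NOT NULL
        CONSTRAINT FK_CustomerOrder_Customer
        FOREIGN KEY REFERENCES dbo.Customer (CustomerID),
    OrderDate  datetime2 NOT NULL
)
WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA);
GO
```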

Equally important is the support for all code pages and for non-Unicode data, as well as the encryption of memory-optimized data with TDE (which encrypts not the data in main memory itself but the data that is stored on disk). *1

In my view, these were the most frequent blockers in projects in which In-Memory was evaluated, as there were hardly any practicable workarounds for these issues.

*1 Data encryption with the ENCRYPTION functions in SQL Server is not supported – this is also true for the new Always Encrypted Technology and Dynamic Data Masking.

Row-Level Security, new in SQL Server 2016, is supported, however. The predicates and functions must accordingly be natively compiled.

Very cool, if you ask me.

A further limitation has been eliminated: memory-optimized tables can now be altered after they have been created.

Adding, dropping and altering columns and indexes is supported. Instead of CREATE/ALTER/DROP INDEX, ALTER TABLE must be used, since in memory-optimized tables the indexes are part of the table definition (which is compiled as a whole).

It is particularly important that the bucket count of hash indexes can now be changed as well, since the number of buckets required may naturally change considerably over time during operation.

This is how it looks in sample code:
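A minimal sketch, assuming a memory-optimized table dbo.SalesOrder with an OrderDate column and an existing hash index named IX_SalesOrder_CustomerID_Hash (all of these names are hypothetical):

```sql
-- Add a column to an existing memory-optimized table.
ALTER TABLE dbo.SalesOrder
    ADD Comment nvarchar(200) NULL;

-- Add an index: ALTER TABLE ... ADD INDEX instead of CREATE INDEX.
ALTER TABLE dbo.SalesOrder
    ADD INDEX IX_SalesOrder_OrderDate NONCLUSTERED (OrderDate);

-- Change the bucket count of an existing hash index.
ALTER TABLE dbo.SalesOrder
    ALTER INDEX IX_SalesOrder_CustomerID_Hash
    REBUILD WITH (BUCKET_COUNT = 2097152);

-- Drop an index: ALTER TABLE ... DROP INDEX instead of DROP INDEX.
ALTER TABLE dbo.SalesOrder
    DROP INDEX IX_SalesOrder_OrderDate;
```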

Statistics can now also be updated with SAMPLE instead of FULLSCAN and, above all, automatically as well.
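A sketch of both options, assuming a memory-optimized table named dbo.SalesOrder (hypothetical). To my understanding of the documentation, the automatic update for memory-optimized tables requires database compatibility level 130; treat that setting as an assumption to verify for your environment:

```sql
-- Assumption: automatic statistics updates on memory-optimized tables
-- need compatibility level 130 (SQL Server 2016).
ALTER DATABASE CURRENT SET COMPATIBILITY_LEVEL = 130;
ALTER DATABASE CURRENT SET AUTO_UPDATE_STATISTICS ON;
GO

-- Manual update with sampling instead of a full scan.
UPDATE STATISTICS dbo.SalesOrder WITH SAMPLE 25 PERCENT;
GO
```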

Data type: LOB datatypes such as varchar/varbinary(max) are supported and stored “off-row.”

Important, newly supported T-SQL functionalities within Natively compiled Stored Procedures and, brand new, Functions, are: the OUTPUT clause, UNION and UNION ALL, DISTINCT, OUTER JOINs, subqueries.
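To illustrate a few of these constructs, here is a minimal sketch of a natively compiled procedure that uses an OUTER JOIN and DISTINCT. The tables, the procedure, and the parameter are hypothetical and exist only for this example:

```sql
CREATE TABLE dbo.Product
(
    ProductID   int          NOT NULL
        CONSTRAINT PK_Product PRIMARY KEY NONCLUSTERED,
    ProductName nvarchar(50) NOT NULL
)
WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA);
GO

CREATE TABLE dbo.ProductSale
(
    SaleID    int   NOT NULL
        CONSTRAINT PK_ProductSale PRIMARY KEY NONCLUSTERED,
    ProductID int   NOT NULL,
    Amount    money NOT NULL,
    INDEX IX_ProductSale_ProductID NONCLUSTERED (ProductID)
)
WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA);
GO

CREATE PROCEDURE dbo.usp_ProductSalesOverview
    @MinAmount money
WITH NATIVE_COMPILATION, SCHEMABINDING
AS
BEGIN ATOMIC WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'us_english')
    -- OUTER JOIN: products without any sales are returned as well.
    SELECT p.ProductID, p.ProductName, s.SaleID, s.Amount
    FROM dbo.Product AS p
    LEFT OUTER JOIN dbo.ProductSale AS s
        ON s.ProductID = p.ProductID;

    -- DISTINCT: each product with at least one sale of @MinAmount or more.
    SELECT DISTINCT s.ProductID
    FROM dbo.ProductSale AS s
    WHERE s.Amount >= @MinAmount;
END;
GO
```

It could then be called like any other procedure, for example with `EXEC dbo.usp_ProductSalesOverview @MinAmount = 100;`.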

Moreover, natively compiled procedures can now also be changed with ALTER PROCEDURE. As the last step of the change, the procedure is then stored compiled in its new form.

In order to obtain a new execution plan when statistics have changed, one can now also execute sp_recompile against natively compiled procedures (and functions).
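A minimal sketch of both steps. The table dbo.SensorReading and the procedure dbo.usp_GetReadings are hypothetical and are created here only so that the example is self-contained:

```sql
CREATE TABLE dbo.SensorReading
(
    ReadingID    int   NOT NULL
        CONSTRAINT PK_SensorReading PRIMARY KEY NONCLUSTERED,
    SensorID     int   NOT NULL,
    ReadingValue float NOT NULL,
    INDEX IX_SensorReading_SensorID NONCLUSTERED (SensorID)
)
WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA);
GO

CREATE PROCEDURE dbo.usp_GetReadings
    @SensorID int
WITH NATIVE_COMPILATION, SCHEMABINDING
AS
BEGIN ATOMIC WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'us_english')
    SELECT ReadingID, ReadingValue
    FROM dbo.SensorReading
    WHERE SensorID = @SensorID;
END;
GO

-- Change the procedure in place instead of dropping and re-creating it.
ALTER PROCEDURE dbo.usp_GetReadings
    @SensorID int
WITH NATIVE_COMPILATION, SCHEMABINDING
AS
BEGIN ATOMIC WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'us_english')
    SELECT ReadingID, SensorID, ReadingValue
    FROM dbo.SensorReading
    WHERE SensorID = @SensorID
    ORDER BY ReadingID;
END;
GO

-- Mark the procedure for recompilation, e.g. after statistics have changed.
EXEC sys.sp_recompile N'dbo.usp_GetReadings';
GO
```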

Performance, too, was further tweaked. As a result, memory-optimized tables and hash indexes can now (in InterOP mode) be scanned in parallel. In the IO area, the entire checkpoint process was reworked, and the data files can now be read and written with multiple threads, which may result in an almost tenfold increase in throughput (if the IO subsystem keeps up with it).

Columnstore Technology

What has been going on in the other storage engine, “Vertipaq”, which has been integrated in SQL Server since 2012 in the form of the Columnstore Indexes? These are also main-memory optimized, but with an entirely different objective:

Storage space optimization and efficient OLAP-style queries.

The innovations here are substantial:

Both Columnstore Index Types, Clustered and Nonclustered, can now be updated!

Additionally, Columnstore Indexes can now be complemented with traditional btree indexes. This is important, as not every query really benefits from the Columnstore storage format. This gain in flexibility is a decisive advantage over the previous releases and cannot be emphasized enough.
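As a minimal sketch, a disk-based table with a Clustered Columnstore Index can be given an additional btree index for selective lookups. The table, column, and index names are hypothetical:

```sql
CREATE TABLE dbo.FactSales
(
    SalesKey     bigint NOT NULL,
    OrderDateKey int    NOT NULL,
    ProductKey   int    NOT NULL,
    Quantity     int    NOT NULL,
    Amount       money  NOT NULL
);
GO

-- Columnstore as the primary storage format for analytic scans.
CREATE CLUSTERED COLUMNSTORE INDEX CCI_FactSales
    ON dbo.FactSales;
GO

-- Additional btree index for queries that look up individual rows.
CREATE NONCLUSTERED INDEX IX_FactSales_SalesKey
    ON dbo.FactSales (SalesKey);
GO
```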

And something else is now possible: Nonclustered Columnstore can be created with a filter.

By means of new techniques the following problem can be solved, for example:

A table with sales transactions is filled by small inserts at intervals of seconds.

At the same time, one would also like to provide various reports with aggregations by day and time of day, as up to date as possible, of course.

The problem typically lies in the fact that one has to decide between indexes for all report queries and those that are minimally required for possible updates. Inserts viewed in isolation do not require any indexes.

This combination results in OLTP tables that are overloaded with many indexes, which I frequently discover during my work and which then need to be “optimized” (removed).

The possibility to create a Nonclustered Columnstore Index in addition to the Clustered Index not only saves indexes (because the Columnstore Index can cover every necessary column); with a smartly applied filter, one can also avoid the index overhead that would otherwise affect the actually more important inserts.

The mixing of OLTP and OLAP queries is one of the most typical problems in databases, and these new possibilities are thus simply a dream for database architects.

This is how it will look in code:
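A minimal sketch of the scenario described above. The table layout and the IsClosed flag used as the filter are assumptions made for illustration; any column that separates the “hot” rows from the rest could serve as the filter:

```sql
CREATE TABLE dbo.SalesTransaction
(
    TransactionID   bigint IDENTITY(1,1) NOT NULL
        CONSTRAINT PK_SalesTransaction PRIMARY KEY CLUSTERED,
    TransactionTime datetime2(0) NOT NULL,
    ProductID       int          NOT NULL,
    Quantity        int          NOT NULL,
    Amount          money        NOT NULL,
    IsClosed        bit          NOT NULL
        CONSTRAINT DF_SalesTransaction_IsClosed DEFAULT (0)
);
GO

-- Filtered Nonclustered Columnstore Index: only closed transactions are
-- maintained in the columnstore, so freshly inserted (open) rows do not
-- pay the columnstore maintenance overhead.
CREATE NONCLUSTERED COLUMNSTORE INDEX NCCI_SalesTransaction_Closed
    ON dbo.SalesTransaction (TransactionTime, ProductID, Quantity, Amount)
    WHERE IsClosed = 1;
GO

-- Example report: sales per day and hour of day over closed transactions.
SELECT CAST(TransactionTime AS date)    AS SalesDay,
       DATEPART(hour, TransactionTime)  AS SalesHour,
       SUM(Amount)                      AS TotalAmount
FROM dbo.SalesTransaction
WHERE IsClosed = 1
GROUP BY CAST(TransactionTime AS date), DATEPART(hour, TransactionTime);
GO
```

Whether the optimizer actually chooses the columnstore index depends on the query and the data volume, so it is worth checking the execution plan.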

40% Performance-Improvement in TPC-H Benchmark

In terms of performance, these improvements have made SQL Server 2016 pull ahead of SQL Server 2014 by close to 40% more QphH (Queries-per-Hour performance metric) in the TPC-H benchmark. You can see in the screenshot that the benchmark result was submitted on 9 March 2016 and was indeed achieved on the same hardware as the SQL Server 2014 result of 1 May 2015.

Further important improvements for Columnstore include the support of the SNAPSHOT Isolation Level (and RCSI), which is especially important to Read-Only Replicas of Availability Groups, as well as online-defragmentation and various analysis enhancements.

The highlight: Real-time Operational Analytics & In-Memory OLTP

The absolute highlight however is surely the combination of memory-optimized tables and ColumnStore Indexes.

As a result, two features that are optimized for entirely opposing query types – OLTP and OLAP – are merged.

Technical facts

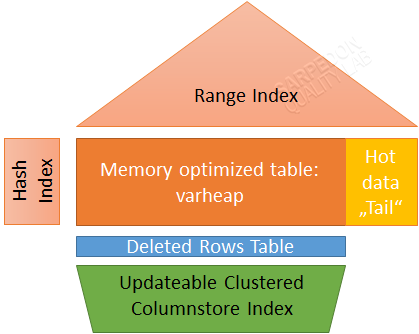

In technical terms, a Clustered Columnstore Index (CCI) is applied. As can be seen in the image, it omits the “hot spot” of the data in order to avoid the overhead of double data storage for changes and for the potentially rapid succession of inserts in this area. In addition to the implied Delta Rowgroup (in the image: Tail), which is covered by the memory-optimized index, there is a “deleted rows table” for deleted data. Both areas are asynchronously compressed and added to the CCI according to the standard Columnstore Index threshold of 1 million rows.

At this point, let me add another note: the limit on the amount of data that can be stored per database in (durable) memory-optimized tables has now been eliminated, too!

As a result, as things currently stand, in theory up to 12 TB (less some maintenance overhead) can be stored in XTP memory under Windows Server 2016!

In terms of structure, it would look like this:

And applied in code, it would look like this:
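A minimal sketch of a memory-optimized table with a Clustered Columnstore Index, followed by one OLTP-style and one analytic query against the same table. Table and column names as well as the bucket count are hypothetical, and the database is assumed to have a MEMORY_OPTIMIZED_DATA filegroup:

```sql
CREATE TABLE dbo.SalesOrderHot
(
    SalesOrderID int IDENTITY(1,1) NOT NULL
        CONSTRAINT PK_SalesOrderHot
        PRIMARY KEY NONCLUSTERED HASH WITH (BUCKET_COUNT = 1048576),
    OrderTime    datetime2(0) NOT NULL,
    CustomerID   int          NOT NULL,
    Amount       money        NOT NULL,
    -- The columnstore index is declared inline as part of the table.
    INDEX CCI_SalesOrderHot CLUSTERED COLUMNSTORE
)
WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA);
GO

-- OLTP-style singleton lookup, served by the hash index.
SELECT SalesOrderID, OrderTime, CustomerID, Amount
FROM dbo.SalesOrderHot
WHERE SalesOrderID = 42;

-- Analytic query in InterOP mode, which can use the columnstore index.
SELECT CustomerID, COUNT(*) AS OrderCount, SUM(Amount) AS TotalAmount
FROM dbo.SalesOrderHot
GROUP BY CustomerID;
GO
```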

The outcome now offers the best of both worlds: high-performing inserts/updates/deletes and singleton queries, and at the same time high-performing analytic queries that handle many millions of rows at once, all at the same time and in the same table!

One restriction in querying is that the Columnstore Index in memory-optimized tables only works in the InterOP mode – thus not in natively compiled procedures.

Which leads us to the last topic:

Open points, missing feature support

There are of course still a number of features that found their way into SQL Server over decades of development of the SQL language but have not yet made it into the new XTP engine. This is not just because the latter is “simply new,” but also because the completely different architecture of this engine, which is radically tailored to In-Memory, differs significantly from traditional database engines in several respects.

Personally, I miss the following features the most:

- DBCC CHECKDB/CHECKTABLE for memory-optimized tables

- CASE-Statement

- Filtered Indexes

- CTEs

- Replication

- OFFSET-Operator

- Ranking Functions

- DDL Trigger for CREATE/DROP TABLE and CREATE/DROP PROCEDURE

- TRUNCATE TABLE

- DATA_COMPRESSION

- Data type datetimeoffset

For the complete list, visit:

Transact-SQL Constructs Not Supported by In-Memory OLTP

Call to Action

Even though the list of missing features and functions is still quite long, only a few people really make full use of these features. And for most of the remaining “blockers” there are actually quite good workarounds, be it in the form of a different architecture or in code. One has to bear in mind that In-Memory tables do not necessarily make sense for all scenarios, but rather for the most heavily loaded tables. And for the latter, one should already have put some effort into the design anyway.

In general, I firmly believe that in almost every database project there are some places that may benefit from In-Memory features.

Why can I be so sure?

Ever since SQL Server 2014 it has been possible to use memory-optimized table variables alongside memory-optimized tables. These, in turn, can replace many temp-table constructs. That does not necessarily result in higher-performing applications right away, but it is a good way to start dealing with In-Memory in terms of code and to slowly but surely start programming with it. A further “quick win” can often be found in data warehouse architectures in the so-called “staging area,” as it is frequently used in traditional DW systems at the moment.

And it is via these “gateways” that you will have ended up in the “In-Memory world” before you know it.
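As a minimal sketch of both “gateways”: a memory-optimized table type whose variables can take the place of a small temp table, and a staging table. All names and bucket counts are hypothetical, and the choice of DURABILITY = SCHEMA_ONLY for the staging table is my assumption for data that can simply be reloaded after a restart:

```sql
-- Memory-optimized table type (needs at least one index).
CREATE TYPE dbo.OrderIdList AS TABLE
(
    OrderID int NOT NULL
        PRIMARY KEY NONCLUSTERED HASH WITH (BUCKET_COUNT = 1024)
)
WITH (MEMORY_OPTIMIZED = ON);
GO

-- Use a variable of that type where a #temp table would otherwise be used.
DECLARE @OrderIds dbo.OrderIdList;

INSERT INTO @OrderIds (OrderID)
VALUES (1), (2), (3);

SELECT OrderID
FROM @OrderIds;
GO

-- Staging table: the schema survives a restart, the data does not.
CREATE TABLE dbo.Staging_Sales
(
    StagingID int IDENTITY(1,1) NOT NULL
        CONSTRAINT PK_Staging_Sales PRIMARY KEY NONCLUSTERED,
    SourceKey int          NOT NULL,
    Amount    money        NOT NULL,
    LoadedAt  datetime2(0) NOT NULL
)
WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_ONLY);
GO
```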

Cu In-Memory

Andreas

PS:

If you are in India in August and want to advance your skills in those new technologies there is still a chance to get a seat in the Precon “Present and Future: In-Memory in SQL Server – from 0 to Operational Analytics Master” at SQL Server Geeks Summit in Bangalore on August 10.
