Use TLS 1.2 and trusted certificates to encrypt data in transit for all SQL Servers, including development environments

Handshake protocol: Server Hello

Content type: blog article

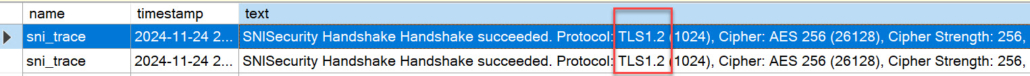

Version: TLS 1.2 (0x0303)

Cipher Suite: TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384

*1 you find the explanation at the bottom of this post

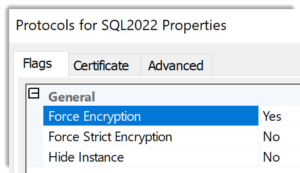

If you are responsible for a SQL Server environment, you may have noticed the following options in the service’s network configuration:

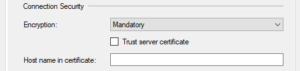

And if you work with SQL Server regularly, use SQL Server Management Studio, Azure Data Studio, or are writing applications that connect to a SQL database, you have probably seen a dialog such as this one:

Or you might have seen connection strings in your application code that look like this:

Encrypt=Yes;TrustServerCertificate=No;”

– or well, at least I hope it is set to “No” 😉

This leads to the topic of this article: We need to make the use of proper channel encryption a standard for every environment. And simply “trusting” any certificate without proper validation is not the way.

These options determine the security of your connection between the client and SQL Server and which version of TLS (Transport Layer Security) will be used for encryption.

All big cloud vendors have been pushing the use of TLS 1.2 in the last two years.

The reason is the government standard FedRAMP, which demands that TLS be configured with a FIPS-2-compliant cipher suite containing encryption algorithms like AES for both data at rest and data in transit.

This recent reminder by Michael Howard, a well-known software security expert at Microsoft with whom I had the honor to work on the SQL Security team actually inspired me to elaborate on this in a blog post (Thanks Michael):

Background

– skip if you know about FedRAMP already or don’t care about US government regulations

FedRAMP (Federal Risk and Authorization Management Program) is a US government program that provides “a standardized, reusable approach to security assessment and authorization for cloud computing products and services that process unclassified information used by agencies.” “FedRAMP is mandatory for all executive agency cloud deployments and service models at the Low, Moderate, and High-risk impact levels.” (source: https://www.fedramp.gov/faqs/)

This push goes back to an update to the NIST Special Publication NIST SP 800-52 Rev. 2 (Guidelines for the Selection, Configuration, and Use of Transport Layer Security (TLS) Implementations ) which specifies the minimum TLS versions that should be configured, specifically for government agencies. And as of August 2019, that is TLS 1.2. At the same time, TLS 1.3 shall be supported at least (not configured yet, if you read the fine print)

FIPS 140: The “Federal Information Processing Standard” 140 is a U.S. government standard that sets security requirements for cryptographic modules.

You can read a bit more about how FedRAMP and FIPS relate in this (slightly critical 🙂 article: Meeting the FedRAMP FIPS 140–2 requirement on AWS

TLS in SQL Server

While at this point TLS 1.3 is not fully supported by SQL Server, TLS 1.2 should absolutely be used because of multiple vulnerabilities that exist in TLS 1.1 and TLS 1.0.

There is a lot of information on the internet explaining the protocol details, security, and performance advantages, so I will not go into that. Here is a short article for starters: TLS 1.2 vs TLS 1.1

To use TLS 1.2, you need to use Certificates to “certify” the authenticity of the SQL Server Instance you are connecting to. When done successfully, your client and the database engine can set up an encrypted channel for communication.

Note on support for TLS 1.2 for older versions of SQL Server:

TLS 1.2 is supported back to SQL Server 2008, which was made possible by special security patches that Microsoft provides. You can find the list of patches for each version here: TLS 1.2 support for Microsoft SQL Server

About self-generated and self-signed certificates

SQL Server self-generates a self-signed certificate at startup if no proper certificate is installed. However, that certificate is equivalent to a self-signed certificate that any user can create. Self-generated and self-signed certificates both fail validation of the chain of trust to the root certificate.

In brief terms, this means that the certificate should be signed by an authority (the certificate issuer) that itself is signed by a root CA which must be known as trusted to the client. And that is implemented through the Trusted Root Certification Authorities Certificate Store in every windows system. – For security and scalability reasons you will usually not have a certificate signed by a root CA directly.

Security advice:

Never use your root CA directly but rather use sub-CA’s (subordinate Certificate Authority) to distribute certificates.

In versions prior to Windows Server 2022, one could place the self-signed certificate in the local “Trusted Root Certification Authorities” store to sort of emulate trust, but with Windows 2022 this hack does not work anymore.

Under Windows 2022, both types of self-signed certificates are equally un-trusted.

This means your environment needs to have either its own Certificate Authority set up, or you can use external commercial CA’s to receive the certificates you need.

Why not use TrustServerCertificate=Yes?

The answer is that this simply skips the validation phase for the connection establishment and as a result, your connection can’t be trusted.

It’s ironic, isn’t it?: Trusting the server without validation leads to non-trustworthy network encryption.

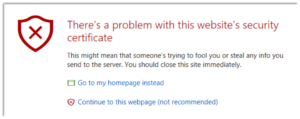

It is the equivalent of saying “continue” on a website with this certificate error.

Not only are these self-signed certificates causing a lower strength of encryption, but they are also susceptible to man-in-the-middle attacks. – You could say: by design. Because this setting literally means “just accept whatever cert the server presents”. Naturally, an attacker can present a self-signed certificate just as easily.

About development environments

Assuming that rolling out proper certificates is a no-brainer for production and test environments, what about development? Is that really necessary?

I argue: Yes. And here is why:

- Secure development starts with a secure development environment:

In the same way that developing secure code is prone to fail if the development environment works constantly with elevated permissions, this applies to working with encryption for data in transit: if the development environment uses different settings for connection security, it will have to be caught in Test. And then what is next? Fix it in test and move to prod. And with new functionalities introduced, keep doing the same over and over again? This is just pushing the problem to a different environment, increasing technical debt, variance, and in the end the chances that some unsecured client- or even server-settings make it to production are high. - Preventing lateral movement through session hijacking:

Can you guarantee that no account that logs on to your dev-environment has permissions to log on to production or test?

You will find that even domain admins will sometimes be connected to a dev environment. And if an attacker is waiting to jump from dev to prod, this is all that’s needed: a session that can be intercepted. There are multiple options for that. Using attacks on SQL via TDS injection, the attacker could for example inject his session to create a linked server on dev that points to a production server and use its current identity to then hijack the production server. - Intellectual property and configuration information are valuable targets alone:

While production data on development machines is becoming less common thanks to higher awareness nowadays (hefty GDPR fines are part of the reason), there is still a lot of interesting IP in the code. Also, process-related configuration data from development environments can help an attacker plan the lateral movement to production.

How to roll out certificates to all SQL Servers at scale

Now that we have established that really all SQL Servers should be using TLS 1.2 and certificates signed by a trusted certificate authority (CA), how can you go about that?

First of all, you will need a Certificate Authority (CA). Larger enterprises will have their own. I won’t go into detail on how to set up here as that is a whole other topic, but a few pointers I will give:

- If you have a Windows Enterprise CA, Certificate Autoenrollment will automatically deploy certificates to newly installed machines. Super helpful! – But in case you are using SQL Server Availability Groups you will still need to create your own certificates since you will probably require multiple Subject Alternate Names (SAN) for the Virtual Network Names/Listeners.

- In general, you will want to integrate the certificate handling in your deployment or post-deployment processes, so it becomes just another piece in “infrastructure as code”.

- As I mentioned above: Never use your root CA directly but rather use sub-CA’s to distribute certificates.

- For security reasons, make sure to use a different sub-CA for your development environment from your production environment.

Real-world challenge: Old clients and drivers

Now that we have hopefully realized that using TLS 1.2 and proper certificates is where we want to be, I need to also include the challenge of older applications which often use client drivers. And old always means higher security risks in software. Specifically, when it comes to encryption and network.

So, unfortunately, you may not be able to force all clients or servers to use TLS 1.2. This technical debt is just another reason, similar to clients that use hardcoded passwords, and elevated accounts like sysadmin that nobody dares to touch, to move the databases that these clients require to different servers instead of hosting them together with others who can use better security. – Because if you mix good and bad security you will end up with bad security.

Segregating by security level may be required if all else fails.

Trust me: Having worked with hundreds of clients of all sizes, I know the pain of dealing with applications that just can’t be touched, be it version-wise or security-wise. All I can do here is point out the importance of leveling up your security.

The sooner you start enforcing better security, the more debt you will prevent.

Do not wait for vendors. Instead, you need to take them with you in your undertaking of rolling out TLS 1.2 across your environment. Push or pull, however, you like to call it.

XEvent trace showing TLS Handshake with TLS 1.2

in that sense

happy securing

Andreas

*1 insider for network package analysts: this is roughly how the server responds to a session handshake request with TLS 1.2 😉

Handshake protocol: Server Hello done

TLS handshake message indicating the server is done and is awaiting the client’s response

Big thanks to:

Rohit Nayak, Principal Program Manager at Microsoft, working on network security

Ralf Dietrich, CEO and expert in computer forensics at Sarpedon Quality Lab Germany

Lars Lawrenz, CEO and head of Software development at Sarpedon Quality Lab Germany

for reviewing and helping with technical details

and Michael Howard, Senior Director at Microsoft for inspiring me to this article

Resources – further readings

This is a huge topic, as it involves different services such as the Certificate Authority, SQL Server itself, client drivers, and the multiple versions of TLS, which in turn also affect TDS.

- The Importance of TLS with SQL Server

- Certificate requirements for SQL Server

- Connect to SQL Server with strict encryption

- TLS 1.2 support for Microsoft SQL Server

- Basic guide to upgrading SQL Server and clients to TLS 1.2

- SSL errors are reported after upgrading to TLS 1.2

- Configure SQL Server Database Engine for encrypting connections

- Configure TLS 1.3 (SQL Server)

- Transport Layer Security and digital certificates

- TDS 8.0 (SQL Server)

- Driver feature support matrix for Microsoft SQL Server

- Encryption and certificate validation in Microsoft.Data.SqlClient

- Configure certificate auto-enrollment (Windows Server)

- PowerShell module: New-SelfSignedCertificate

- Root certificates of Azure SQL DB and SQL Managed Instance

Leave a Reply

Want to join the discussion?Feel free to contribute!